Past Projects

SeeBetter

SeeBetter was a European collaboration of research groups from the Friedrich Miescher Institute for Biomedical Research, FMI (Basel, CH), the Interuniversity Microelectronics Centre, IMEC (Leuven, BE), Imperial College (London, UK) and our group that aimed to create a new generation of silicon retinas. The work within this collaboration was divided as follows:

- At the FMI, the group of Dr. Botond Roska used genetic and physiological techniques to better understand the functional roles of the 6 major classes of retinal ganglion cells.

- At Imperial College, the group of Dr. Konstantin Nikolic mathematically and computationally modeled retinal visual processing from the viewpoints of biology, machine vision, and future retinal prosthetics.

- We designed and built the first high-performance silicon retina with a heterogeneous array of pixels specialized for both spatial and temporal visual processing.

- At IMEC, Dr. David San Segundo Bello and Dr. Koen De Munck processed our wafer for high quantum efficiency using back-side illumination technology.

SeeBetter was funded by the EU as a so-called "small or medium-scale focused research project (STREP)" (number 270324) in FET proactive ICT Call 6 (FP7-ICT-2009-6) in the Brain-inspired ICT work programme.

More information can be found at www.seebetter.eu.

Main results

SeeBetter resulted in a large number of new, fully functional DVS and DAVIS event cameras that are now distributed by inivation.com for R&D studies. The results include the first frame+event camera (DAVIS), the first heterogeneous color frame+DVS camera (CDAVIS), the first back-illuminated DAVIS, and the first sensitive DAVIS (SDAVIS). The dedicated SeeBetter wafer run with TowerJazz produced sensors with resolutions ranging from 128x128 to 640x480 pixels.

Publications

SeeBetter, together with its follow-up project VISUALISE, resulted in a large number of publications on sensors and their applications.

- Berner, R. (2011). Building Blocks for Event-Based Sensors. Available at: https://drive.google.com/open?id=0BzvXOhBHjRheUVpSSTV6alZleHM [Accessed November 23, 2013].

- Berner, R., Brandli, C., Yang, M., Liu, S.-C., and Delbruck, T. (2013a). A 240 x 180 120dB 10mW 12us-Latency Sparse Output Vision Sensor for Mobile Applications. in Proc. 2013 Intl. Image Sensors Workshop (Snowbird, UT, USA). Available at: http://www.imagesensors.org/Past%20Workshops/2013%20Workshop/2013%20Papers/03-1_005-delbruck_paper.pdf [Accessed September 24, 2013].

- Berner, R., Brandli, C., Yang, M., Liu, S.-C., and Delbruck, T. (2013b). A 240x180 10mW 12us Latency Sparse-Output Vision Sensor for Mobile Applications. in Proc. 2013 Symp. VLSI (Kyoto Japan), C186–C187.

- Berner, R., and Delbruck, T. (2011). Event-Based Pixel Sensitive to Changes of Color and Brightness. IEEE Transactions on Circuits and Systems I: Regular Papers 58, 1581–1590. doi:10.1109/TCSI.2011.2157770.

- Brandli, C., Berner, R., Yang, M., Liu, S.-C., Villanueva, V., and Delbruck, T. (2014a). Live demonstration: The “DAVIS” Dynamic and Active-Pixel Vision Sensor. in 2014 IEEE International Symposium on Circuits and Systems (ISCAS), 440–440. doi:10.1109/ISCAS.2014.6865163.

- Brandli, C., Berner, R., Yang, M., Liu, S.-C., and Delbruck, T. (2014b). A 240x180 130 dB 3 us Latency Global Shutter Spatiotemporal Vision Sensor. IEEE Journal of Solid-State Circuits 49, 2333–2341. doi:10.1109/JSSC.2014.2342715.

- Brandli, C., Mantel, T., Hutter, M., Höpflinger, M., Berner, R., Siegwart, R., et al. (2013). Adaptive Pulsed Laser Line Extraction for Terrain Reconstruction using a Dynamic Vision Sensor. Front. Neurosci. 7, 275. doi:10.3389/fnins.2013.00275.

- Brandli, C., Muller, L., and Delbruck, T. (2014d). Real-Time, High-Speed Video Decompression Using a Frame- and Event-Based DAVIS Sensor. in Proc. 2014 Intl. Symp. Circuits and Systems (ISCAS 2014) (Melbourne, Australia), 686–689. doi:10.1109/ISCAS.2014.6865228.

- Brandli, C., Strubel, J., Keller, S., Scaramuzza, D., and Delbruck, T. (2016). ELiSeD – An Event-Based Line Segment Detector. in IEEE Conf. on Event Based Communication, Control and Signal Processing 2016 (EBCCSP2016) (Krakow, Poland), (accepted).

- Delbruck, T., and Lang, M. (2013). Robotic Goalie with 3ms Reaction Time at 4% CPU Load Using Event-Based Dynamic Vision Sensor. Front. Neurosci. 7, 223. doi:10.3389/fnins.2013.00223.

- Delbruck, T., Linares-Barranco, B., Culurciello, E., and Posch, C. (2010). Activity-Driven, Event-Based Vision Sensors. in Proceedings of 2010 IEEE International Symposium on Circuits and Systems (ISCAS) (Paris), 2426–2429. doi:10.1109/ISCAS.2010.5537149.

- Delbruck, T., Pfeiffer, M., Juston, R., Orchard, G., Mueggler, E., Linares-Barranco, A., et al. (2015). Human vs. computer slot car racing using an event and frame-based DAVIS vision sensor. in 2015 IEEE International Symposium on Circuits and Systems (ISCAS), 2409–2412. doi:10.1109/ISCAS.2015.7169170.

- Delbruck, T., Villanueva, V., and Longinotti, L. (2014). Integration of dynamic vision sensor with inertial measurement unit for electronically stabilized event-based vision. in 2014 IEEE International Symposium on Circuits and Systems (ISCAS), 2636–2639. doi:10.1109/ISCAS.2014.6865714.

- Gallman, N., and Bocherens, N. (2016). Implementation of an algorithm for electronic vision stabilization in VHDL for dynamic vision sensors.

- Katz, M. L., Nikolic, K., and Delbruck, T. (2012). Live demonstration: Behavioural emulation of event-based vision sensors. in 2012 IEEE International Symposium on Circuits and Systems (ISCAS), 736–740. doi:10.1109/ISCAS.2012.6272143.

- Lee, J. H., Delbruck, T., Pfeiffer, M., Park, P. K. J., Shin, C.-W., Ryu, H., et al. (2014). Real-Time Gesture Interface Based on Event-Driven Processing From Stereo Silicon Retinas. IEEE Transactions on Neural Networks and Learning Systems 25, 2250–2263. doi:10.1109/TNNLS.2014.2308551.

- Li, C., Brandli, C., Berner, R., Liu, H., Yang, M., Liu, S.-C., et al. (2015a). An RGBW Color VGA Rolling and Global Shutter Dynamic and Active-Pixel Vision Sensor. in 2015 International Image Sensor Workshop (IISW 2015) (Vaals, Netherlands: imagesensors.org), 393–396.

- Li, C., Brandli, C., Berner, R., Liu, H., Yang, M., Liu, S.-C., et al. (2015b). Design of an RGBW color VGA rolling and global shutter dynamic and active-pixel vision sensor. in 2015 IEEE International Symposium on Circuits and Systems (ISCAS), 718–721. doi:10.1109/ISCAS.2015.7168734.

- Linares-Barranco, A., Gomez-Rodriguez, F., Villanueva, V., Longinotti, L., and Delbruck, T. (2015). A USB3.0 FPGA event-based filtering and tracking framework for dynamic vision sensors. in 2015 IEEE International Symposium on Circuits and Systems (ISCAS), 2417–2420. doi:10.1109/ISCAS.2015.7169172.

- Liu, H., Moeys, D. P., Das, G., Neil, D., Liu, S.-C., and Delbrück, T. (2016). Combined frame- and event-based detection and tracking. in 2016 IEEE International Symposium on Circuits and Systems (ISCAS), 2511–2514. doi:10.1109/ISCAS.2016.7539103.

- Liu, H., Rios-Navarro, A., Moeys, D. P., Delbruck, T., and Linares-Barranco, A. (2017). Neuromorphic Approach Sensitivity Cell Modeling and FPGA Implementation. in Artificial Neural Networks and Machine Learning -- ICANN 2017 (Alghero, Sardinia, Italy: Springer). Available at: https://drive.google.com/open?id=0BzvXOhBHjRheLWNyRUZYR1BuSnc.

- Moeys, D. P., Corradi, F., Kerr, E., Vance, P., Das, G., Neil, D., et al. (2016a). Steering a predator robot using a mixed frame/event-driven convolutional neural network. in 2016 Second International Conference on Event-based Control, Communication, and Signal Processing (EBCCSP), 1–8. doi:10.1109/EBCCSP.2016.7605233.

- Moeys, D. P., Corradi, F., Li, C., Bamford, S. A., Longinotti, L., Voigt, F. F., et al. (2017a). A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications. IEEE Transactions on Biomedical Circuits and Systems PP, 1–14. doi:10.1109/TBCAS.2017.2759783.

- Moeys, D. P., Li, C., Martel, J. N. P., Bamford, S., Longinotti, L., Motsnyi, V., et al. (2017b). Color Temporal Contrast Sensitivity in Dynamic Vision Sensors. in (Baltimore, MD, USA).

- Moeys, D. P., Linares-Barranco, A., Delbrück, T., and Rios-Navarro, A. (2016b). Retinal Ganglion Cell Software and FPGA Implementation for Object Detection and Tracking. in ISCAS 2016 (Montreal), accepted.

- Moeys, D. P., Neil, D., Corradi, F., Kerr, E., Vance, P., Das, G., et al. (2017c). Closed-Loop Predator Robot Control using a DAVIS Data-Driven Convolutional Neural Network.

- Nozaki, Y., and Delbruck, T. (2017). Temperature and Parasitic Photocurrent Effects in Dynamic Vision Sensors. IEEE Transactions on Electron Devices PP, 1–7. doi:10.1109/TED.2017.2717848.

- Posch, C., Serrano-Gotarredona, T., Linares-Barranco, B., and Delbruck, T. (2014). Retinomorphic Event-Based Vision Sensors: Bioinspired Cameras With Spiking Output. Proceedings of the IEEE 102, 1470–1484. doi:10.1109/JPROC.2014.2346153.

- Taverni, G., Moeys, D. P., Li, C., Cavaco, C., Motsnyi, V., Bello, D. S. S., et al. (2018). Front and Back Illuminated Dynamic and Active Pixel Vision Sensors Comparison. IEEE Transactions on Circuits and Systems II: Express Briefs (accepted) 65, 677–681. Available at: https://ieeexplore.ieee.org/document/8334288/?arnumber=8334288&source=authoralert.

- Taverni, G., Moeys, D. P., Voigt, F. F., Li, C., Cavaco, C., Motsnyi, V., et al. (2017). In-vivo Imaging of Neural Activity with Dynamic Vision Sensors. in 2017 IEEE Biomedical Circuits and Systems Conference (BioCAS) (Turin, Italy). Available at: https://drive.google.com/file/d/0BzvXOhBHjRheNjQ3NlY2UW9hUHc/view?usp=sharing.

- Yang, M., Liu, S.-C., and Delbruck, T. (2017). Analysis of Encoding Degradation in Spiking Sensors Due to Spike Delay Variation. IEEE Transactions on Circuits and Systems I: Regular Papers 64, 145–155. doi:10.1109/TCSI.2016.2613503.

- Yang, M., Liu, S.-C., and Delbruck, T. (2014). Comparison of spike encoding schemes in asynchronous vision sensors: Modeling and design. in 2014 IEEE International Symposium on Circuits and Systems (ISCAS), 2632–2635. doi:10.1109/ISCAS.2014.6865713.

- Yang, M., Liu, S.-C., and Delbruck, T. (2015). A Dynamic Vision Sensor With 1% Temporal Contrast Sensitivity and In-Pixel Asynchronous Delta Modulator for Event Encoding. IEEE Journal of Solid-State Circuits 50, 1–12. doi:10.1109/JSSC.2015.2425886.

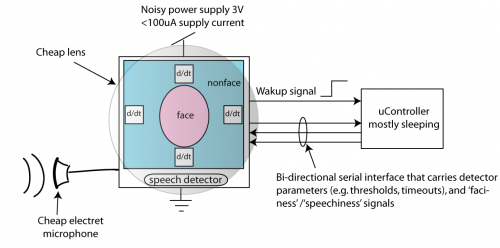

DollBrain

The MIFAVO project (2007-2010) aimed to develop micropower integrated face and voice detection technology. Please contact Tobi Delbruck for questions about this project.

Motivation and Goals

Natural human-machine interaction that relies on vision and audition requires state-of-the-art technology that burns tens of watts, making it impossible to run the necessary algorithms continuously under battery power. It would be economically and ecologically desirable to run at full power only when the presence of a human who wants to interact is detected. The DollBrain project takes its inspiration from nature, where interactions among species require high effort (and high energy consumption) only during limited periods of engagement; at all other times, organisms stay alert for general classes of stimuli while expending little effort and conserving energy.

We are addressing this need by developing integrated micropower face and voice detection technology that, used in conjunction, can provide a reliable and power-efficient wake-up cue for higher-level processing. We are investigating both algorithms and technology.
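As a rough illustration of the wake-up idea, the sketch below gates a high-power stage on the outputs of two always-on, low-power detectors. The class name `WakeUpGate`, the rule that face and voice must agree for a few consecutive frames, and the `hold_frames`/`release_frames` hysteresis parameters are hypothetical choices made for this example, not the project's actual design.

```python
from dataclasses import dataclass, field


@dataclass
class WakeUpGate:
    """Hypothetical wake-up rule: turn the high-power stage on only after the
    face and voice detectors agree for `hold_frames` consecutive frames, and
    turn it off after `release_frames` frames in which neither fires."""

    hold_frames: int = 3
    release_frames: int = 10
    awake: bool = field(default=False, init=False)
    _agree: int = field(default=0, init=False)
    _idle: int = field(default=0, init=False)

    def update(self, face: bool, voice: bool) -> bool:
        # Count consecutive frames in which both cues are present.
        self._agree = self._agree + 1 if (face and voice) else 0
        # Count consecutive frames in which neither cue is present.
        self._idle = 0 if (face or voice) else self._idle + 1
        if not self.awake and self._agree >= self.hold_frames:
            self.awake = True   # wake the expensive recognizer
        elif self.awake and self._idle >= self.release_frames:
            self.awake = False  # fall back to low-power listening
        return self.awake


gate = WakeUpGate(hold_frames=2, release_frames=2)
print([gate.update(f, v) for f, v in [(True, False), (True, True), (True, True),
                                      (False, False), (False, False)]])
# → [False, False, True, True, False]
```

Requiring agreement before waking and a separate idle count before sleeping is one simple way to keep a single spurious detection from switching on the power-hungry stage.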

A specific goal of this project is to build a demonstrator that uses an integrated face and speech detector chip that will be developed in the project.

Further information on this project can be found on the OFFICIAL WIKI

COGAIN

COGAIN is a network of excellence on Communication by Gaze Interaction. COGAIN integrates cutting-edge expertise on interface technologies for the benefit of users with disabilities. The network gathers Europe's leading expertise in eye tracking integration with computers in a research project on assistive technologies for citizens with motor impairments. Through the integration of research activities, the network aims to develop new technologies and systems, improve existing gaze-based interaction techniques, and facilitate the implementation of systems for everyday communication.

The COGAIN project started in September 2004 as a network of excellence supported by the European Commission's IST 6th framework program. The funded project ended in August 2009. However, COGAIN continues its work in the form of an association.

For further information, please visit the OFFICIAL WEBSITE

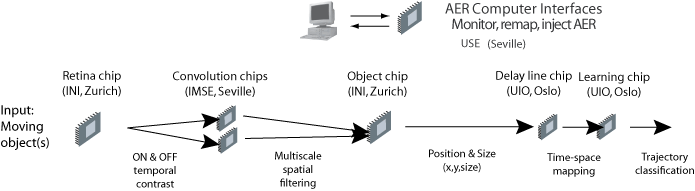

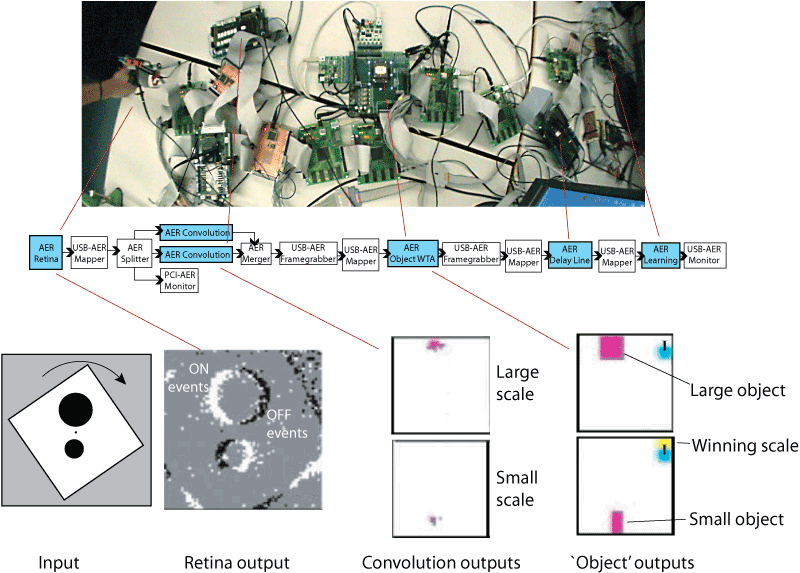

CAVIAR

CAVIAR (see the coordinator's site) is a European Commission-funded project to develop a multichip vision system based on Address-Event Representation (AER) communication of spike events.

Our partners in CAVIAR are

- the group of Bernabe Linares-Barranco (the project coordinator) at the Institute of Microelectronics in Sevilla,

- the group of Anton Civit at the University of Sevilla, and

- the group of Phillip Hafliger at the University of Oslo.

Shih-Chii Liu is leading INI's part of CAVIAR and works on the object chip part of the system with her student, Matthias Oster.

My student Patrick Lichtsteiner and I took over the late Jorg Kramer's part of the project: the development of a dynamic vision sensor AER silicon retina.

CAVIAR components emulate parts of biological visual pathways. They compute on incoming spikes and emit outgoing spikes. Cells are connected by asynchronous digital buses that carry their spike addresses using the Address-Event Representation (AER).
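To make the AER idea concrete, here is a minimal sketch in which each spike is reduced to a single address word on a shared bus and every receiver decodes that address itself. The 7-bit x, 7-bit y, 1-bit polarity layout, the names `encode`, `decode`, `route` and `AddressEvent`, and the timestamp being attached on the receiving side are all assumptions for illustration; they do not describe the actual CAVIAR bus format.

```python
from typing import Callable, Iterable, NamedTuple

X_BITS = 7  # assumed: 128 columns
Y_BITS = 7  # assumed: 128 rows


class AddressEvent(NamedTuple):
    timestamp_us: int  # assumed to be attached by the receiving monitor
    address: int       # the only information that travels on the AER bus


def encode(x: int, y: int, polarity: int) -> int:
    """Pack a pixel's coordinates and ON/OFF polarity into one address word
    (hypothetical layout: [y | x | polarity])."""
    return (y << (X_BITS + 1)) | (x << 1) | (polarity & 1)


def decode(address: int) -> tuple[int, int, int]:
    """Inverse of encode(): recover (x, y, polarity) from an address word."""
    polarity = address & 1
    x = (address >> 1) & ((1 << X_BITS) - 1)
    y = (address >> (X_BITS + 1)) & ((1 << Y_BITS) - 1)
    return x, y, polarity


def route(events: Iterable[AddressEvent],
          receivers: list[Callable[[int, int, int, int], None]]) -> None:
    """Broadcast each spike to every receiver on the bus; each receiver
    interprets the address for itself."""
    for ev in events:
        x, y, pol = decode(ev.address)
        for receive in receivers:
            receive(ev.timestamp_us, x, y, pol)


log = []
route([AddressEvent(10, encode(5, 100, 1))], [lambda t, x, y, p: log.append((t, x, y, p))])
print(log)  # → [(10, 5, 100, 1)]
```

The point of the representation is that time is implicit: a spike is "sent" simply by placing its address on the bus when it occurs, so no frame clock is needed.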

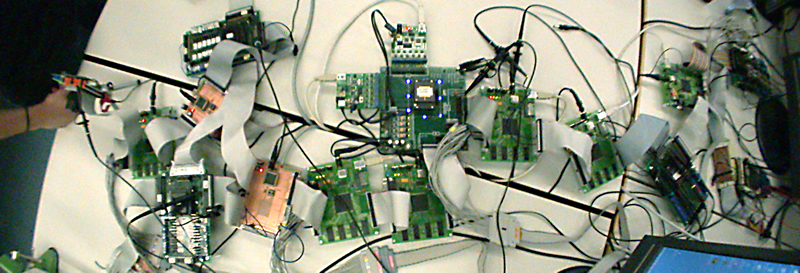

A working CAVIAR prototype assembled in April 2005 at the CAVIAR workshop at the INI looked like this:

Our characterization at the April 2005 workshop showed that stimuli of two different shapes on a rotating disk could be discriminated simultaneously, and their positions extracted, at the level of the object chip. Two convolution chips were used, one with a large circular kernel and the other with a small circular kernel. The object chip (a 2-D winner-take-all, WTA) cleaned up the convolution results; at the moment the snapshot below was taken, it indicated that the larger scale was winning.
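The two-scale discrimination described above can be sketched in software: each convolution stage stamps a circular template around every incoming spike, and a 2-D winner-take-all picks the strongest response across both maps. The ring-shaped kernels, the normalisation by kernel size, and all function names are assumptions for this illustration; the CAVIAR convolution and object chips did this in hardware with their own kernels and dynamics.

```python
import math


def ring_kernel(radius: int) -> list[tuple[int, int]]:
    """Offsets on a circle of the given radius (hypothetical template kernel)."""
    return [(dx, dy)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)
            if round(math.hypot(dx, dy)) == radius]


def convolve_events(events, kernel, size):
    """Event-driven convolution: every input spike at (x, y) adds the kernel,
    centred on (x, y), into an integer accumulator map."""
    acc = [[0] * size for _ in range(size)]
    for x, y in events:
        for dx, dy in kernel:
            u, v = x + dx, y + dy
            if 0 <= u < size and 0 <= v < size:
                acc[v][u] += 1
    return acc


def winner_take_all(maps, kernel_sizes):
    """2-D WTA across several maps: return (map_index, x, y) of the largest
    response, normalised by kernel size so the scales compete fairly."""
    best_val, best = -1.0, None
    for i, (m, n) in enumerate(zip(maps, kernel_sizes)):
        for y, row in enumerate(m):
            for x, count in enumerate(row):
                if count / n > best_val:
                    best_val, best = count / n, (i, x, y)
    return best


SIZE = 21
big_ring = [(10 + dx, 10 + dy) for dx, dy in ring_kernel(4)]  # large circle centred at (10, 10)
kernels = [ring_kernel(2), ring_kernel(4)]                      # small and large scale
maps = [convolve_events(big_ring, k, SIZE) for k in kernels]
print(winner_take_all(maps, [len(k) for k in kernels]))  # → (1, 10, 10)
```

With a large circular stimulus, the large-scale map (index 1) wins at the circle's centre, which is the kind of outcome the snapshot reports.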

From the most recent workshop, held in Sevilla in February 2006, you can play a Flash video (~10 MB) showing some of the accomplishments. The video emphasizes the novel development of a sensory-motor system built from CAVIAR components. Another accomplishment was the use of a generic monitor/sequencer board, with multiple USB interfaces on one computer, to capture all the timestamped events from all 37,000 neurons in the entire system and render them in real time.
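Capturing from several USB monitor boards at once means their separately timestamped streams have to be interleaved before rendering. The sketch below shows one way to do that, assuming every board's stream is already time-ordered and all boards share a synchronised clock; the names `TimestampedEvent`, `merge_streams` and `time_slices` are hypothetical, and the actual monitor/sequencer software may have worked differently.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class TimestampedEvent(NamedTuple):
    timestamp_us: int  # assumed: all monitor boards share a synchronised clock
    board: int         # which USB monitor board captured the event
    address: int       # AER address of the neuron that spiked


def merge_streams(streams: Iterable[Iterable[TimestampedEvent]]) -> Iterator[TimestampedEvent]:
    """Lazily merge per-board streams, each already in time order, into one
    globally time-ordered stream."""
    return heapq.merge(*streams, key=lambda ev: ev.timestamp_us)


def time_slices(events: Iterable[TimestampedEvent], slice_us: int) -> Iterator[list[TimestampedEvent]]:
    """Group a time-ordered stream into consecutive fixed-length slices for
    rendering; empty slices are emitted for gaps so playback keeps real time."""
    batch: list[TimestampedEvent] = []
    end = None
    for ev in events:
        if end is None:
            end = ev.timestamp_us + slice_us
        while ev.timestamp_us >= end:
            yield batch
            batch, end = [], end + slice_us
        batch.append(ev)
    if batch:
        yield batch


board_a = [TimestampedEvent(0, 0, 17), TimestampedEvent(12, 0, 3)]
board_b = [TimestampedEvent(5, 1, 42), TimestampedEvent(30, 1, 8)]
for frame in time_slices(merge_streams([board_a, board_b]), slice_us=10):
    print([ev.timestamp_us for ev in frame])
```

This prints the slices `[0, 5]`, `[12]`, `[]` and `[30]`. Using `heapq.merge` keeps the merge lazy, so events can be rendered as they arrive rather than after the whole recording is loaded.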

Resources

Some resources for CAVIAR project members:

- Help for setting up software USB1/2 AE monitoring/display, post Sevilla workshop Feb 2006

- Help for the original SimpleMonitorUSBXPress board, including software downloads, user guide, design PCBs, firmware, etc.

Poster prepared by Tobi Delbruck and presented at NIPS 2005 meeting by Tobi Delbruck, Matthias Oster, and Shih-Chii Liu

Poster prepared by Tobi Delbruck for Brussels review meeting 1 Dec 2003

The partners have individually or collaboratively written many papers about work done on aspects of CAVIAR. So far we have only written a single collective paper on the entire project, accepted to the NIPS 2005 meeting:

R. Serrano-Gotarredona, et al., "AER Building Blocks for Multi-Layer Multi-Chip Neuromorphic Vision Systems," in Advances in Neural Information Processing Systems 18, Vancouver, 2005, pp. 1217-1224.

Photo from spring 2005 at the INI, taken during one of the CAVIAR assembly get-togethers.

Top row: Patrick Lichtsteiner, Tobi Delbruck, Paco Gomez-Rodriguez, Raphael Serrano-Gotarredona, Phillip Hafliger

Bottom row: Matthias Oster, Raphael Paz-Vicente, Shih-Chii Liu

Ada's Luminous Tactile Interactive Floor

News: full paper out in Robotics and Autonomous Systems, May 2007

(Some images © Stephan Kubli 2002)

As you can probably glean from the animated images above, the floor is a kind of skin for Ada: a tactile and luminous surface. People walk on it, and Ada can feel where they are and communicate with them. This was a big job...

- To get an idea of what this floor does, have a look at a short video (Windows Media Format, 11 MB) that shows Ada waking up as people enter the exhibit and then following a girl as she is tracked and actively probed, using cues shown on the floor, for her willingness to interact. A longer video about Ada is linked below.

Anyhow, to make a long story very short, Ada was built and ran successfully for 5 months during the summer of 2002, with more than 500,000 visitors.

We wrote a very short paper describing the floor and its use in Ada from a technical point of view. Although Ada interacted autonomously with over half a million people during its Expo.02 run, this paper was rejected from the Interact03 conference on human-computer interaction, probably partly because of its title. So here it is for your pleasure.

- T. Delbrück, A.M. Whatley, R. Douglas, K. Hepp, P.F.M.J. Verschure (2003), The mother of all disco floors.

We also tried to get this work into ICRA2005 (International Conference on Robotics and Automation) with a new and improved draft of the paper. This was also rejected, albeit marginally, and only on the grounds of relevance. I suppose roboticists just don't have HAL or 2001 in mind enough to think that autonomous robotic spaces are also interesting. Here is that paper about technical aspects of the floor:

- Tobi Delbrück, Adrian M. Whatley, Rodney Douglas, Kynan Eng, Klaus Hepp and Paul F.M.J. Verschure (2004). A Tactile Luminous Floor Used as a Playful Space’s Skin.

Next, Kynan Eng, Paul Verschure and I put together a video paper for this same Interact2003 meeting that gives a short overview of Ada:

- T. Delbrück, K. Eng, A. Bäbler, U. Bernardet, M. Blanchard, A. Briska, M. Costa, R. Douglas, K. Hepp, D. Klein, J. Manzolli, M. Mintz, F. Roth, U. Rutishauser, K. Wassermann, A. Wittmann, A.M. Whatley, R. Wyss and P.F.M.J. Verschure (2003) Ada: a playful interactive space, Interact 2003, Ninth IFIP TC13 International Conference on Human-Computer Interaction INTERACT'03, Sept 1-5, Zurich, Switzerland. pp 989-992.

- Video (Windows Media Format, 25 MB) that goes with this paper. It shows Ada's floor in many of its aspects, as well as an overview of the setting at the Swiss Expo 2002 and a few glimpses of other aspects of the exhibit.

Finally, we published a full paper about the floor in 2007

- Delbruck, T., A. M. Whatley, R. Douglas, K. Eng, K. Hepp and P. F. M. J. Verschure (2007). "A Tactile Luminous Floor for an Interactive Autonomous Space" (needs subscription) Robotics and Autonomous Systems 55: 433-443.

Some marketing information about the floor was assembled by Gerd Dietrich and Kynan Eng during our post-Ada cooperation with Westiform. It shows specifications for the floor and some nice images.

- Westifloor rental blurb sheet. INI/Westiform blurb sheet for the Interactive Light Floor rental, specifies rental costs, constraints and capabilities (2004)

- Westiform's professional promotional material on their concept for floor uses (2004)

Standalone floor exhibition

To summarize the requirements for a standalone floor exhibition: the standalone floor now runs a self-running demo from a single Linux machine (a mini PC with a PCI slot). You can make a very low-maintenance, interactive turnkey exhibition using some tiles, a fat wall plug (depending on how many tiles you want to light up), the PC box, and a couple of self-powered PC speakers for sound effects. A floor of 64 tiles can be set up in a new location in about half a day by 2-3 people. Depending on the size of the floor, visitors can experience a set of reactive effects and play a variety of games:

- In Football, visitors chase and try to jump on a virtual ball that is indicated by a brightly lit white tile. The virtual ball skitters about, bouncing off the walls and the visitors and producing appropriate sound effects. Visitor collisions increase the speed of play. Successfully stomping on the ball results in a victory reward: winners are surrounded by a halo of light that grows and fades away.

- Pong splits the floor into two halves, with a virtual paddle on each half of the floor collectively controlled by the center of mass of the player locations on that half of the floor, leading to spontaneous cooperation among strangers.

- Boogie is a collective dance game or prototype automatic disco. The power spectra of the analog load information from active dancers are analyzed to extract dominant frequencies, and a consensus drives the overall rhythm and volume of the dance mix. Inactive participants who simply stand or walk about are ignored, so a few active dancers can easily dominate the rhythm of the entire space.

- Gunfight labels tracked players with lit tiles. Players use "hop" gestures (jumping to an adjacent tile) to shoot virtual bullets toward other players and eliminate them from the game. Players can use "pogo" gestures (jumping up and landing on the same tile) to temporarily surround themselves with an impenetrable shield. The surviving victor is rewarded with impressive collapsing rings of green covering the entire floor.

- In HotLava, which was inspired by a television game show, two players compete to find their way across the floor on a hidden path. If they step off the path, their half of the floor flashes an angry red, and they must start over from the beginning, while trying to remember their previous steps.

- In Squash, each tile on the floor is randomly illuminated with one of two colors. Two teams are formed, and each team is assigned a color. The teams then compete to see who can first extinguish all the tiles of their own team's color by stepping on them. (Stepping on tiles of the opponent's color only wastes time and helps the opposition.) This game is good for a battle of the sexes.
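The Boogie game above extracts dominant frequencies from dancers' load signals and lets a consensus set the rhythm. The sketch below illustrates that idea under stated assumptions: a 20 Hz tile-load sampling rate, a plain DFT per dancer, a fixed power threshold (`min_power`) to ignore people who merely stand or walk, and the median as the consensus rule. None of these specifics come from the floor's actual software, and the volume half of the description is left out because the text does not say how volume was derived.

```python
import cmath
import math
import statistics

SAMPLE_RATE_HZ = 20.0  # assumed tile-load sampling rate


def dominant_frequency(load, sample_rate=SAMPLE_RATE_HZ):
    """Return (frequency_hz, power) of the strongest non-DC component of one
    dancer's load trace, using a plain discrete Fourier transform."""
    n = len(load)
    mean = sum(load) / n
    centred = [v - mean for v in load]  # remove body weight (DC component)
    best_f, best_p = 0.0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(centred))
        power = abs(coeff) ** 2 / n
        if power > best_p:
            best_f, best_p = k * sample_rate / n, power
    return best_f, best_p


def consensus_rhythm(traces, min_power=1.0):
    """Median dominant frequency over active dancers. Traces whose strongest
    rhythmic component is weaker than `min_power` (people standing or
    strolling) are ignored; returns None if nobody is dancing."""
    freqs = [f for f, p in map(dominant_frequency, traces) if p >= min_power]
    return statistics.median(freqs) if freqs else None


def bouncing(freq_hz, n=40, weight=70.0, amplitude=15.0):
    """Synthetic load trace of a dancer bouncing at freq_hz (illustration only)."""
    return [weight + amplitude * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE_HZ)
            for t in range(n)]


traces = [bouncing(2.0), bouncing(2.0), bouncing(2.5), [70.0] * 40]  # last one just stands
print(consensus_rhythm(traces))  # → 2.0
```

Using the median rather than the mean means a single dancer far off the beat cannot drag the tempo, while, as the game description notes, a few active dancers still dominate because inactive visitors are filtered out.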

For more information about the floor, see Gerd Dietrich's page on the floor. It includes some short video clips of a small floor.

For other papers about Ada, see the official Ada exhibition pages, the INI's Ada pages, Kynan Eng's pages, or my publications.

Links

Other interactive floor projects

- Lightspace interactive luminous floor (Cambridge MA). They can sell or rent you an interactive LED floor with small tiles. Its capabilities and specifications are not clear. Makes a beautiful reactive dance floor.

- MIT Media Lab Intelligent Tiles This project makes scalable smart tiles; application is not clear.

- Georgia Tech's SmartFloor (2000) They made a tactile floor (or at least a few floor tiles) intended for making rooms and buildings smarter about where people are; they studied how a person could be identified by their dynamic ground reaction force footstep load profile.

- Litefoot Homepage (1997). This tactile floor from the University of Limerick was intended for dance composition.

- MIT Media Lab: Joe Paradiso. They made the Magic Carpet tactile carpet (and many other interesting devices) and worked in collaboration with the University of Limerick on ZTiles (ZTiles Limerick).

- MIT Media Lab: Ted Selker. They made a "Social Floor", but it is hard to get information on it.

- 1E Disco Dance Floor. These MIT students made a splash on Slashdot in 2005 with an extreme-engineering luminous disco floor. It doesn't appear to have tactile capabilities, or if it does, they are used like Lightspace's for reactive effects (Disco's staying alive on East Campus - MIT News Office). They are now selling plans for their USB interface board (Dropout Design, LLC), which has since been used by the Washington University IEEE Dance Floor Project for a larger disco floor.

- Intelligent Cooperative Systems Laboratory Home Page. This is the Sato/Mori lab. Hiroshi MORISHITA was lead on their Robotic Room tactile floor, which is a high resolution binary tactile floor.

Technology links

- Interbus club A useful source of information for Interbus developers.

- PhoenixContact More Interbus and factory automation supplies, including the (hard to find) serial port diagnostic interface to Interbus, suitable for a very small, low-reliability installation.

- Hilscher GmbH They make the Interbus PCI master card that we used (largely because it has a Linux driver).

- Interlink Electronics, FSR makers. They make the FSRs (Force Sensitive Resistors) that we used in our floor tiles. This is one of two FSR sources worldwide. They are tied closely to IEE, which makes seat occupancy detection for car makers' air bag deployment.

- Tekscan: Tactile Pressure Measurement, Pressure Mapping Systems, and Force Sensors and Measurement Systems They are the other source of FSRs; slightly more expensive and perhaps better suited for analog force measurement.

- Kistler - measure. analyze. innovate. Another supplier of force sensors, mostly load cells, which are based on piezoelectric crystals and are much more precise and accurate than FSRs, but also much thicker, more expensive, and more difficult to interface electronically.