NPP project

We are proud to kick off Phase 2 of the Neuromorphic Processor Project (aka NPP).

NPP is developing theory, architectures, and digital implementations targeting specific applications of deep neural network technology in vision and audition. Deep learning has become the state-of-the-art machine learning approach for sensory processing applications, and this project aims at real-time, low-power, brain-inspired solutions suitable for full-custom system-on-chip (SoC) integration. A particular aim of NPP is to develop efficient, data-driven deep neural network architectures that can enable always-on operation on battery-powered mobile devices in conjunction with event-driven sensors.

The project team includes world-leading academic partners in the USA, Canada, and Spain. The project is coordinated by the Institute of Neuroinformatics (INI). The overall PI of the project is Tobi Delbruck; Shih-Chii Liu is the other main NPP PI at INI.

The NPP Phase 2 partners include

- Inst. of Neuroinformatics (INI), UZH-ETH Zurich (T. Delbruck, SC Liu, G Indiveri, M Pfeiffer)

- Montreal Institute for Learning Algorithms (MILA) - Univ. of Montreal (Y Bengio)

- Robotics and Technology of Computers Lab, Univ. of Seville (A. Linares-Barranco)

- Univ. of Toronto (A Moshovos)

- Computer Laboratory - Univ. of Cambridge (R Mullins)

In Phase 1 of NPP, we worked with partners from Canada, the USA, and Spain to develop deep inference theory and processor architectures with state-of-the-art power efficiency. Several key hardware accelerator results inspired by neuromorphic design principles were obtained by the Sensors group. These accelerators exploit the sparsity of neural activations in space and time to reduce both computation and, in particular, access to external memory, which costs hundreds of times more energy than local memory access or arithmetic operations. In this sense, these DNN accelerators resemble synchronous spiking neural networks: computation is triggered only by non-zero or changing activations.
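The memory-traffic argument can be made concrete with a small sketch. It encodes a post-ReLU feature map as a one-bit-per-element occupancy map plus a list of the non-zero values, and counts the bits that would have to cross the external memory interface. This generic bitmap-plus-values scheme is an assumption chosen for illustration, in the spirit of (not a description of) the compressed formats used by the accelerators described here; the 16-bit activation width and all function names are hypothetical.

```python
import numpy as np

BITS_PER_VALUE = 16  # assumed activation width (hypothetical)


def encode_sparse(fmap: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a feature map into a boolean occupancy map and its non-zero values."""
    mask = fmap != 0
    return mask, fmap[mask]


def decode_sparse(mask: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Rebuild the dense feature map from the occupancy map and non-zero values."""
    dense = np.zeros(mask.shape, dtype=values.dtype)
    dense[mask] = values
    return dense


def traffic_bits(fmap: np.ndarray, bits_per_value: int = BITS_PER_VALUE) -> tuple[int, int]:
    """Return (dense_bits, sparse_bits) needed to move fmap to or from external memory."""
    dense_bits = fmap.size * bits_per_value
    mask, values = encode_sparse(fmap)
    # 1 occupancy bit per element, plus the payload of the non-zero values only
    sparse_bits = mask.size * 1 + values.size * bits_per_value
    return dense_bits, sparse_bits


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fmap = np.maximum(rng.standard_normal((64, 32, 32)), 0.0)  # ReLU output
    dense_bits, sparse_bits = traffic_bits(fmap)
    print(f"zero fraction: {np.mean(fmap == 0):.2f}")
    print(f"dense: {dense_bits} bits, sparse: {sparse_bits} bits")
    assert np.array_equal(decode_sparse(*encode_sparse(fmap)), fmap)  # lossless
```

With zero-mean Gaussian pre-activations, roughly half of the ReLU outputs are zero, so the encoded map needs roughly 9/16 of the dense bits (1 map bit plus, on average, half of a 16-bit value per element). Sparser feature maps increase the saving, and every skipped zero also removes the multiply-accumulates it would have fed.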

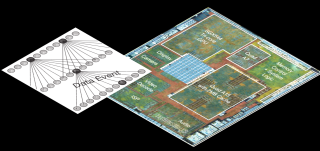

- NullHop is a flexible convolutional neural network (CNN) accelerator that exploits the spatial sparsity of feature maps, i.e., the large fraction of zero activations produced by the widely used ReLU activation function. NullHop can achieve a state-of-the-art power efficiency of 3 TOp/s/W at a throughput of 500 GOp/s. See the IEEE TNNLS paper (IEEE link), the video of NullHop driving CNN inference in RoShamBo, and the video explaining the Rock-Scissors-Paper demo from Scientifica 2018.

- DeltaRNN is a recurrent neural network (RNN) accelerator that exploits temporal sparsity: most units in an RNN change slowly, so only changes that exceed a threshold need to be processed. DeltaRNN can accelerate gated recurrent unit (GRU) RNNs by a factor of 10 or more, even for single-sample inference on a single stream. On a Xilinx Zynq FPGA, it achieves a state-of-the-art effective throughput of 1.2 TOp/s at a power efficiency of 164 GOp/s/W. See the ICML theory paper, the FPGA'18 paper, and the first DeltaRNNv1 demo video, in which DeltaRNN performs real-time spoken digit recognition for speakers with a variety of accents.
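The delta idea behind DeltaRNN can be sketched for a single matrix-vector product: remember the last value propagated for each input, propagate only changes whose magnitude exceeds a threshold, and accumulate W·Δx into a stored result. This is a plain NumPy illustration under assumed names (`DeltaMatVec`, `threshold`), not the DeltaRNN hardware datapath; in a delta GRU, the same update would be applied to both the input vector and the previous hidden state.

```python
import numpy as np


class DeltaMatVec:
    """Maintain y = W @ x_ref, where x_ref tracks x but only updates on large changes."""

    def __init__(self, W: np.ndarray, threshold: float):
        self.W = W
        self.threshold = threshold
        self.x_ref = np.zeros(W.shape[1])  # last value propagated for each input
        self.y = np.zeros(W.shape[0])      # invariant: y == W @ x_ref
        self.columns_used = 0              # weight columns read so far

    def step(self, x: np.ndarray) -> np.ndarray:
        delta = x - self.x_ref
        active = np.abs(delta) > self.threshold  # inputs whose change is large enough
        # Only the weight columns of active inputs are fetched and multiplied.
        self.y += self.W[:, active] @ delta[active]
        self.x_ref[active] = x[active]
        self.columns_used += int(active.sum())
        return self.y.copy()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_in, n_out, steps = 64, 128, 100
    W = rng.standard_normal((n_out, n_in))
    dmv = DeltaMatVec(W, threshold=0.1)
    x = rng.standard_normal(n_in)
    for _ in range(steps):
        x = x + 0.02 * rng.standard_normal(n_in)  # slowly drifting input
        y = dmv.step(x)
    print(f"fraction of dense column reads: {dmv.columns_used / (steps * n_in):.2f}")
    print(f"max |y - W @ x|: {np.max(np.abs(y - W @ x)):.3f}")
```

Because the stored result always equals W applied to the last propagated inputs, each input's error is bounded by the threshold. Raising the threshold trades accuracy for fewer weight-column reads, which is where the memory-bandwidth saving comes from.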

Other key results showed that

- Spiking neural networks (SNNs) can achieve accuracy equivalent to that of conventional analog (non-spiking) neural networks, even for very deep CNNs such as VGG-16 and GoogLeNet.

- Both CNNs and RNNs can be trained with greatly reduced weight and state precision, resulting in large savings in memory bandwidth.
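The SNN conversion result rests on the observation that the firing rate of an integrate-and-fire (IF) neuron driven by a constant input approximates a ReLU of that input, clipped at the maximum rate of one spike per time step. The minimal discrete-time sketch below assumes a non-leaky neuron, a firing threshold of 1, and reset by subtraction (one of the reset schemes discussed in the conversion literature); the function names are hypothetical.

```python
import numpy as np


def if_rate(inputs: np.ndarray, steps: int = 1000, v_thresh: float = 1.0) -> np.ndarray:
    """Simulate non-leaky IF neurons with constant input; return spikes per time step."""
    v = np.zeros_like(inputs, dtype=float)       # membrane potentials
    spikes = np.zeros_like(inputs, dtype=float)  # spike counts
    for _ in range(steps):
        v += inputs                    # integrate the input each time step
        fired = v >= v_thresh          # at most one spike per neuron per step
        spikes += fired
        v[fired] -= v_thresh           # reset by subtraction keeps the residual charge
    return spikes / steps


def relu_clipped(x: np.ndarray, max_rate: float = 1.0) -> np.ndarray:
    """The analog activation the IF rate approximates."""
    return np.clip(x, 0.0, max_rate)


if __name__ == "__main__":
    a = np.array([-0.5, 0.0, 0.123, 0.5, 0.9, 1.5])
    print("IF rate:     ", if_rate(a))
    print("clipped ReLU:", relu_clipped(a))
```

For inputs between 0 and 1, the rate differs from the input by at most about one spike divided by the number of steps, so accuracy improves with simulation length. Inputs above 1 saturate, which is why converted networks typically normalize weights so that activations stay within the representable rate range.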

The NPP partners in Phase 1 included

- Inst. of Neuroinformatics (INI), UZH-ETH Zurich (T. Delbruck, SC Liu, G Indiveri, M Pfeiffer)

- inilabs (F Corradi)

- Robotics and Technology of Computers Lab, Univ. of Seville (A. Linares-Barranco)

- Inst. of Microelectronics Seville (IMSE-CNM) - (B. Linares-Barranco)

- Montreal Institute for Learning Algorithms (MILA) - Univ. of Montreal (Y Bengio)

- Cornell Univ (R Manohar)

- Arizona State University (J. Seo)

Key publications from NPP Phase 1 are listed below.

- A. Aimar, H. Mostafa, E. Calabrese, A. Rios-Navarro, R. Tapiador-Morales, I.-A. Lungu, et al., “NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–13, 2018, doi: 10.1109/TNNLS.2018.2852335.

- C. Gao, D. Neil, E. Ceolini, S.-C. Liu, and T. Delbruck, “DeltaRNN: A Power-efficient Recurrent Neural Network Accelerator,” in Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA ’18), New York, NY, USA: ACM, 2018, pp. 21–30, doi: 10.1145/3174243.3174261.

- J. Binas, D. Neil, S.-C. Liu, and T. Delbruck, “DDD17: End-To-End DAVIS Driving Dataset,” in ICML’17 Workshop on Machine Learning for Autonomous Vehicles (MLAV 2017), Sydney, Australia, 2017 [Online]. Available: https://openreview.net/forum?id=HkehpKVG-&noteId=HkehpKVG-

- D. Neil, J. H. Lee, T. Delbruck, and S.-C. Liu, “Delta Networks for Optimized Recurrent Network Computation,” in PMLR, 2017, pp. 2584–2593 [Online]. Available: http://proceedings.mlr.press/v70/neil17a.html. [Accessed: 14-Sep-2017]

- A. Aimar, H. Mostafa, E. Calabrese, A. Rios-Navarro, R. Tapiador-Morales, I.-A. Lungu, M. B. Milde, F. Corradi, A. Linares-Barranco, S.-C. Liu, and T. Delbruck, “NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps,” arXiv:1706.01406 [cs], Jun. 2017 [Online]. Available: http://arxiv.org/abs/1706.01406. [Accessed: 03-Sep-2017]

- D. Neil, J. H. Lee, T. Delbruck, and S.-C. Liu, “Delta Networks for Optimized Recurrent Network Computation,” accepted to ICML, 2017 [Online]. Available: https://arxiv.org/abs/1612.05571. [Accessed: 19-Dec-2016]

- D. P. Moeys et al., “A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications,” IEEE Transactions on Biomedical Circuits and Systems, submitted 2017.

- I.-A. Lungu, F. Corradi, and T. Delbruck, “Live Demonstration: Convolutional Neural Network Driven by Dynamic Vision Sensor Playing RoShamBo,” in 2017 IEEE Symposium on Circuits and Systems (ISCAS 2017), Baltimore, MD, USA, 2017 [Online]. Available: https://drive.google.com/file/d/0BzvXOhBHjRheYjNWZGYtNFpVRkU/view?usp=sharing

- C. Gao, D. Neil, E. Ceolini, and S.-C. Liu, “DeltaRNN: A Power-efficient RNN Accelerator,” under review, 2017.

- D. Neil, J. H. Lee, T. Delbruck, and S.-C. Liu, “Delta Networks for Optimized Recurrent Network Computation,” arXiv:1612.05571 [cs], Dec. 2016 [Online]. Available: http://arxiv.org/abs/1612.05571. [Accessed: 19-Dec-2016]

- B. Rueckauer, I.-A. Lungu, Y. Hu, and M. Pfeiffer, “Theory and Tools for the Conversion of Analog to Spiking Convolutional Neural Networks,” arXiv:1612.04052 [cs, stat], Dec. 2016 [Online]. Available: http://arxiv.org/abs/1612.04052. [Accessed: 16-May-2017]

- J. H. Lee, T. Delbruck, and M. Pfeiffer, “Training Deep Spiking Neural Networks using Backpropagation,” arXiv:1608.08782 [cs], Aug. 2016 [Online]. Available: http://arxiv.org/abs/1608.08782. [Accessed: 03-Sep-2017]

- J. Ott, Z. Lin, Y. Zhang, S.-C. Liu, and Y. Bengio, “Recurrent Neural Networks With Limited Numerical Precision,” arXiv:1608.06902 [cs], Aug. 2016 [Online]. Available: http://arxiv.org/abs/1608.06902. [Accessed: 25-Aug-2016]

- J. Binas, D. Neil, G. Indiveri, S.-C. Liu, and M. Pfeiffer, “Precise deep neural network computation on imprecise low-power analog hardware,” arXiv:1606.07786 [cs], Jun. 2016 [Online]. Available: http://arxiv.org/abs/1606.07786. [Accessed: 23-Aug-2016]

- S. Braun, D. Neil, and S.-C. Liu, “A Curriculum Learning Method for Improved Noise Robustness in Automatic Speech Recognition,” arXiv:1606.06864 [cs], Jun. 2016 [Online]. Available: http://arxiv.org/abs/1606.06864. [Accessed: 16-May-2017]

- D. P. Moeys, F. Corradi, E. Kerr, P. Vance, G. Das, D. Neil, D. Kerr, and T. Delbrück, “Steering a predator robot using a mixed frame/event-driven convolutional neural network,” in 2016 Second International Conference on Event-based Control, Communication, and Signal Processing (EBCCSP), 2016, pp. 1–8.

- D. Neil and S. C. Liu, “Effective sensor fusion with event-based sensors and deep network architectures,” in 2016 IEEE International Symposium on Circuits and Systems (ISCAS), 2016, pp. 2282–2285.

- D. Neil, M. Pfeiffer, and S.-C. Liu, “Learning to Be Efficient: Algorithms for Training Low-latency, Low-compute Deep Spiking Neural Networks,” in Proceedings of the 31st Annual ACM Symposium on Applied Computing, New York, NY, USA, 2016, pp. 293–298 [Online]. Available: http://doi.acm.org/10.1145/2851613.2851724. [Accessed: 23-Aug-2016]

- T. Delbruck, “Neuromorphic Vision Sensing and Processing (Invited paper),” in 2016 European Solid-State Device Research Conf. & European Solid-State Circuits Conf. Proceedings, Lausanne, Switzerland, 2016.

- A. Aimar, E. Calabrese, H. Mostafa, A. Rios-Navarro, R. Tapiador, I.-A. Lungu, A. Jimenez-Fernandez, F. Corradi, S.-C. Liu, A. Linares-Barranco, and T. Delbruck, “Nullhop: Flexibly efficient FPGA CNN accelerator driven by DAVIS neuromorphic vision sensor,” in NIPS 2016, Barcelona, 2016 [Online]. Available: https://nips.cc/Conferences/2016/Schedule?showEvent=6317

- E. Stromatias, D. Neil, M. Pfeiffer, F. Galluppi, S. B. Furber, and S.-C. Liu, “Robustness of spiking Deep Belief Networks to noise and reduced bit precision of neuro-inspired hardware platforms,” Front Neurosci, vol. 9, Jul. 2015 [Online]. Available: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4496577/. [Accessed: 23-Aug-2016]